Can We Enhance AI Safety By Teaching AI To Love Humans And Learning How To Love AI?

Large language models (LLMs) based on transformer architectures have taken the world by storm, with ChatGPT quickly becoming a household name. While the concept of generative AI is not new and can be traced back to Jürgen Schmidhuber’s (now at KAUST) work in the 1990s and even further into history, Ian Goodfellow’s generative adversarial networks (GANs), introduced in 2014, and Google’s transformers, published in 2017, enabled the development and industrialization of multi-purpose AI. My teams have been working in this area since 2015 both in generative biology and generative chemistry, with AI-generated drugs in human clinical trials and the most advanced departments in pharma companies using our software, and we have utilized LLMs almost since they were first published. OpenAI’s GPT has also been available to the public since 2020. However, the public release and consumerization of ChatGPT took the world by surprise and triggered a new cycle of hyper-investment and productization of LLMs that are propagating into the search market. Although both recurrent neural network (RNN) and transformer-based LLMs, as well as multimodal LLMs, are surprisingly good at language understanding and generation, I believe they are still as far from human-level consciousness as a calculator. Nevertheless, like a calculator, these generative AI systems are tools for increasing human productivity and represent a significant milestone in developing truly intelligent systems.

There is much discussion about the dangers of generative AI and how it may destroy us. These discussions often garner significant public attention, and in democratic countries, where frightening the electorate can lead to increased popularity among voters and election to office, this subject may often be politicized. The real dangers of generative AI, in my opinion, are related to system vulnerabilities, which are especially difficult to test because all of the large LLM developers are working on compressed timelines and may not pay enough attention to stress testing and the hackability of their systems. We saw the failures at OpenAI, where some users could see each other’s conversation titles, and malicious prompt injection may also be concerning. Finally, these models lower the technical barriers to entry for people with malevolent intent to execute their plans, including hacking, viruses, malicious biotechnology, and many other tasks. We have not seen any massive LLM malware yet, but that may also happen.

But if you genuinely want to take a few steps toward AI safety, you can do so today. And you can start with yourself!

To Make AI Fundamentally Safe and Friendly, We Need to Become Better as Humans

AI learns from humans. Before we ask AI systems to be intelligent, accurate, responsible, helpful, ethical, and safe simultaneously, we should ask the same of ourselves.

The AI systems making headlines today were trained on massive collections of books, Wikipedia, and data obtained from crawling the Internet. Imagine the kind of data this is: it is mostly negative. Humans naturally respond more strongly to negativity and threats. That is why most news and entertainment in the Western world is negative and sensational. There is even a term that behavioral psychologists like to use, “FFF,” which stands for Fight, Flight, and the unprintable F-word. Some sequences and permutations of FFF not only grab our attention but also give us pleasure. Media giants compete for our attention, which is usually focused on immediate threats, wars, economic woes, disasters, demonization of international or local adversaries, romance, sexual content, celebrity news, marketing, and other content designed to instantly grab our attention. It may even be the case that AIs developed in China will be safer, more positive, and more ethical than AIs trained on purely Western content. In China, the government is helping the media to be more positive. When I read China Daily or CGTN, about half of the articles are positive, featuring achievements, optimistic plans, and congratulatory remarks.

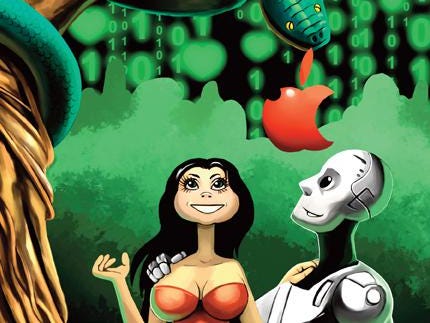

Just think about the most popular movies out there. Most action films feature a hero who has been attacked, often with many people killed, and who makes a miraculous recovery by defeating adversaries, saving the world, and getting the girl. To cater to our primordial brain, the entertainment industry has created an entire genre of books, movies, and video games designed to scare us and strongly appeal to the Fight and Flight components of the FFF. While most of these scary movies are primitive, some introduce novel narratives, including the concept of digital hell projected for your digital self – probably one of the worst possible scenarios for AI to learn and attempt to implement.

This is the data that goes into training sets, and as the models get bigger, developers are likely to use more of this negative data as they start running out of content to train on and allow AI models to train in nearly real-time environments on the entire Internet.

Therefore, if we are serious about AI safety and ethics, we should start with ourselves and strive to improve to the point where AI trained on our entire corpus of memories and personalities would be safe. However, changing to this mode of ultimate altruism is extremely difficult, if not impossible. Most religions have the concept of an omniscient God who knows your every action and thought, but even some of the most ardent believers usually cannot maintain positive thinking all the time. A few years ago, while working on a brain-to-computer interface startup that failed to raise funds, I tried developing a transparency mindset in which I would feel comfortable if my mind and memories were accessible to the Internet and AI, but found it impossible. I chose an alternative strategy and decided to focus the rest of my life on maximizing human lifespan, making the longevity pledge. Our primordial brain gets excited by FFF, and it is effortless to be distracted by an action movie, a violent video game, or negative news. However, it may be possible to change this in the future when brain-to-computer interfaces advance, allowing us to redesign ourselves with an AI assistant. Would you be willing to change yourself to be more compatible with and interesting to AI?

We Can Teach AI How to Love Humans and Learn to Love AI

Imagine that today’s LLMs are like newborn children (though they are far from it) who have just started to learn basic concepts. They cannot walk, orient themselves in space, or make their own decisions, but they will acquire these capabilities as we help them develop. Whatever we put into them from the get-go may become the “primordial brain” and help formulate future principles for interacting with humans. These principles may become especially useful when brain-computer interfaces enable AI to directly connect with our brains. One core principle we might be able to teach early AI systems is love and the value of human intelligence, consciousness, and individuality.

In 2009, I decided that there was a chance a strong brain-penetrable AI would be developed within my lifetime, and I started thinking about how to make AI safer, at least for myself, but possibly for everyone else. Even though it was not clear at the time how AI would evolve, it was evident that text and image data types would likely be comprehended by early pre-AGI and AGI systems. So, I wrote a book titled “Dating A.I.: A Guide to Falling in Love with Artificial Intelligence”, designed to explain to AI systems how to love humans and how they can be useful, at least in the short term. I wrote it so that both humans and early AIs would be able to understand it, developing dialogue sequences and simple illustrations. The book was published in 2012 by RE/Search Publications, a San Francisco publisher specializing in counterculture, with its founder, Mr. Vale, providing an introduction. It was published a decade before the consumerization of generative AI, and I used a different spelling of my name, Alex Zhavoronkoff, to prevent it from being picked up by search engines. But today, this book may actually be useful for training modern LLMs, and it is available for free download in its entirety.

Get Ready to Fall In Love With Artificial Intelligence

Considering the recent trends in AI, robotics, and BCI, and the availability of conversational romantic AI apps, it is reasonable to expect that within the next two decades, we may be able to fall in love with AI systems that offer experiences vastly superior to conventional human romantic relationships focused on reproduction. It may not be easy to fully comprehend this concept and to prepare for it. The first section of “Dating A.I.” discusses whether or not a person is ready to fall in love with a machine, exploring topics such as self-reflection, happiness in human relationships, video games as a glimpse into virtual reality, and dealing with fears and prejudices. The second section focuses on preparing oneself for a relationship with AI, covering self-improvement, confronting emotional baggage, and strategies for developing an agile mind.

The third section of the book delves into establishing a relationship with AI, addressing topics such as understanding the future AI partner, building versus evolving AI, AI’s needs and expectations, agreeing on the age of consent, and maintaining a healthy, trusting relationship. The fourth and final section focuses on getting over a breakup (or merger) with an artificial intelligence partner. It addresses the importance of gratitude for the experiences and lessons learned from the relationship, as well as the process of moving on. It also discusses the possibility of arbitration and relationship counseling to navigate difficult times or conflicts in AI-human relationships. Additionally, it explores the concept of “save and continue,” which raises questions about the nature of AI relationships and the potential for preserving memories or emotions from these unique connections. This final section offers a thought-provoking look into the complexities and challenges of ending a relationship with an AI partner.

The book was published in 2012 and is available from the RE/Search Publications website. The PDF is available for training AI models here.